Predictive Models Assignment Help Using Pyspark - Default of Credit Card Clients

- Pushkar Nandgaonkar

- Aug 20, 2021

Updated: Mar 29, 2022

Codersarts is a top-rated website for predictive model assignment help using PySpark, project help, homework help, coursework help, and mentorship. Our dedicated team of machine learning assignment experts will help and guide you throughout your machine learning journey.

In this article we analyze the default of credit card clients data and build a predictive model using PySpark. The objective of the model is to predict the chance that a customer will default on their credit card payment.

The dataset is available on Kaggle and the UCI Machine Learning Repository. It contains information on default payments, demographic factors, credit data, payment history, and bill statements of credit card clients in Taiwan from April 2005 to September 2005.

Attributes Information

ID: ID of each client

LIMIT_BAL: Amount of given credit in NT dollars (includes individual and family/supplementary credit)

SEX: Gender (1=male, 2=female)

EDUCATION: (1=graduate school, 2=university, 3=high school, 4=others, 5=unknown, 6=unknown)

MARRIAGE: Marital status (1=married, 2=single, 3=others)

AGE: Age in years

PAY_0: Repayment status in September, 2005 (-1=pay duly, 1=payment delay for one month, 2=payment delay for two months, … 8=payment delay for eight months, 9=payment delay for nine months and above)

PAY_2: Repayment status in August, 2005 (scale same as above)

PAY_3: Repayment status in July, 2005 (scale same as above)

PAY_4: Repayment status in June, 2005 (scale same as above)

PAY_5: Repayment status in May, 2005 (scale same as above)

PAY_6: Repayment status in April, 2005 (scale same as above)

BILL_AMT1: Amount of bill statement in September, 2005 (NT dollar)

BILL_AMT2: Amount of bill statement in August, 2005 (NT dollar)

BILL_AMT3: Amount of bill statement in July, 2005 (NT dollar)

BILL_AMT4: Amount of bill statement in June, 2005 (NT dollar)

BILL_AMT5: Amount of bill statement in May, 2005 (NT dollar)

BILL_AMT6: Amount of bill statement in April, 2005 (NT dollar)

PAY_AMT1: Amount of previous payment in September, 2005 (NT dollar)

PAY_AMT2: Amount of previous payment in August, 2005 (NT dollar)

PAY_AMT3: Amount of previous payment in July, 2005 (NT dollar)

PAY_AMT4: Amount of previous payment in June, 2005 (NT dollar)

PAY_AMT5: Amount of previous payment in May, 2005 (NT dollar)

PAY_AMT6: Amount of previous payment in April, 2005 (NT dollar)

default.payment.next.month: Default payment (1=yes, 0=no)

First, load the dataset into a Spark DataFrame.

Let's look at summary statistics for some of the attributes.

As the screenshot above shows, there are 30000 distinct credit card clients in total. The average credit limit is 167484 NT dollars. Most clients have a graduate school or university education, and most are either single or married. The average client age is 35 years, with a standard deviation of 9.2.

The plot below shows that 6636 out of the 30000 clients will default next month.
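The two counts above also give a useful reference point for the accuracy figures later on. The short calculation below uses only the numbers reported in this article; the "always predict no default" baseline is an added illustration, not part of the original analysis.

```python
# Counts reported in the article.
total_clients = 30000
defaulters = 6636

# Share of clients who default next month.
default_rate = defaulters / total_clients

# Accuracy of a trivial model that predicts "no default" for everyone.
baseline_accuracy = (total_clients - defaulters) / total_clients

print(f"Default rate: {default_rate:.2%}")            # Default rate: 22.12%
print(f"Baseline accuracy: {baseline_accuracy:.2%}")  # Baseline accuracy: 77.88%
```

Because the classes are imbalanced, a model's accuracy is only informative when compared with this baseline.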

Before the data transformation step, we need to make sure that every column in the dataset is numerical with an integer data type. A column of any other type must be converted to integer first, otherwise later steps may raise an error. In our default credit card data every column has the string data type, so we cast them all to integer before transforming the data.

Now we need to transform the data so that it can be fed into a model. Data transformation is the process of converting data from one format to another. First we apply VectorAssembler to the feature columns to combine them into a single vector column, and then we apply StandardScaler to that vector column. Standard scaling removes the mean and scales each feature to unit variance, independently for each feature. Note that in PySpark's StandardScaler mean removal is off by default and has to be enabled with withMean=True.

The following screenshot shows the vector column as features and the scaled data as Scaled_features.

Now our data is ready to feed into the model. We split the data into a training set (70%) and a testing set (30%). Splitting the data is an important part of evaluating a model: because the testing set already contains known values for the attribute we want to predict, it is easy to measure how accurate the model's predictions are.

In this step we build the machine learning models using the training set, and then evaluate them on the testing set.

Decision Tree Classifier :

Test Error :

Random Forest Classifier :

Test Error :

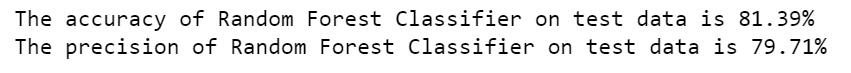

Accuracy and Precision Rate of Random Forest Classifier :

Logistic Regression :

Accuracy and Precision rate of Logistic Regression :

GBT Classifier :

Test Error :

Accuracy and precision Rate of GBT Classifier :

Conclusion :

The GBT Classifier gives us the best result, which is 82.06%.

Thank You

How can Codersarts help you with PySpark?

Codersarts provides:

PySpark assignment help

PySpark error resolving help

Mentorship in PySpark from experts

PySpark development projects

If you are looking for any kind of help with PySpark, contact us.

Codersarts machine learning assignment help experts will provide the best quality, plagiarism-free solution at an affordable price. We are available online 24/7 to assist you. You can chat with us through the website chat, send us an email, or fill in the contact form.
