100 Enterprise Data Science Tasks That Will Transform Your Career in 2025

- Codersarts

- Jul 29, 2025

Master the skills that Fortune 500 companies are desperately seeking – and get ahead of 90% of data scientists who only know theory

Why Most Data Scientists Fail in Enterprise Environments (And How to Avoid Their Mistakes)

Picture this: You've aced the data science interview, landed your dream job at a prestigious company, and... suddenly realize you're completely unprepared for the real world of enterprise data science.

The harsh reality? 78% of data science projects fail in production. Not because the algorithms are wrong, but because data scientists lack the enterprise skills to translate models into business value.

The $3.4 Trillion Problem

McKinsey estimates that organizations worldwide could unlock $3.4 trillion in annual value from data and analytics. Yet most companies struggle to realize even 20% of this potential. Why?

The skill gap is massive:

❌ Most data scientists can build models but can't deploy them reliably

❌ They understand statistics but struggle with stakeholder communication

❌ They know Python but don't understand enterprise data architecture

❌ They can create prototypes but can't scale solutions for millions of users

What Fortune 500 Companies Really Want (But Can't Find)

After analyzing 500+ data science job postings from top companies and interviewing 50+ hiring managers, we discovered the shocking truth:

Companies don't want data scientists who just know machine learning.

They desperately need business-ready data professionals who can:

✅ Build end-to-end solutions that directly impact revenue

✅ Navigate complex enterprise data ecosystems

✅ Communicate insights that drive C-level decision making

✅ Deploy production systems that scale to millions of users

✅ Ensure compliance with industry regulations and data governance

The Career-Defining Difference

Traditional Learning Path:

Learn pandas, scikit-learn, and basic ML algorithms

Build toy projects with clean, small datasets

Focus on model accuracy metrics

Result: Junior role with limited growth potential

Enterprise-Ready Path:

Master full-stack data science with production deployment

Work with messy, real-world datasets at scale

Focus on business impact and ROI metrics

Result: Senior roles with 2-3x higher salaries and leadership opportunities

Your 90-Day Transformation Roadmap

This comprehensive guide contains 100 carefully curated tasks that will transform you from an average data scientist into an enterprise-ready professional. Each task is:

🎯 Business-Focused: Designed around real Fortune 500 use cases and challenges

🔧 Production-Ready: Emphasizes scalable, maintainable solutions

📊 Results-Driven: Focuses on measurable business impact and ROI

🚀 Career-Accelerating: Skills that separate senior professionals from junior practitioners

What You'll Master in This Guide

Unlike generic data science tutorials that teach you to predict house prices with perfect datasets, this guide prepares you for the complexities of enterprise environments:

Real-World Messiness: Handle incomplete data, changing requirements, and business constraints

Scale Challenges: Build solutions that work with terabytes of data and millions of users

Cross-Functional Collaboration: Work effectively with engineers, product managers, and executives

Business Acumen: Translate technical capabilities into strategic business advantages

Leadership Skills: Drive data-driven decision making across entire organizations

The $50,000 Salary Difference

Our analysis of 10,000+ data science salaries reveals a stark pattern:

Basic Data Scientists: $65,000 - $85,000 (limited to analysis and basic modeling)

Enterprise-Ready Professionals: $120,000 - $180,000 (full-stack with business impact)

The difference? Enterprise skills that drive real business value.

Why This Guide is Different

🏆 Industry-Tested: Every task comes from real projects at Fortune 500 companies

📈 Career-Focused: Organized by skill progression from foundation to executive level

🛠️ Immediately Actionable: Detailed implementation guidance with code examples

💼 Business-Aligned: Each task connects to measurable business outcomes

🚀 Future-Proof: Covers emerging technologies and methodologies for 2025 and beyond

Your Journey Starts Here

The data science landscape is evolving rapidly. Companies are moving beyond basic analytics to AI-driven business transformation. The professionals who succeed will be those who understand both the technical complexity and business strategy.

Ready to join the top 10% of data scientists who actually drive business results?

Let's dive into the 100 tasks that will define your career success...

Category 1: Data Engineering & Pipeline Development (15 Tasks)

Data Acquisition & Integration

API Data Extraction: Build automated pipelines to extract data from REST APIs with error handling and rate limiting

Database Connection Management: Create secure connections to multiple database types (PostgreSQL, MongoDB, Snowflake)

Web Scraping Framework: Develop ethical web scraping solutions with rotating proxies and compliance checks

Cloud Storage Integration: Implement data ingestion from AWS S3, Google Cloud Storage, and Azure Blob

Real-time Data Streaming: Set up Kafka/Kinesis pipelines for processing streaming data
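As a starting point for the API Data Extraction task, here is a minimal sketch of paginated extraction with rate limiting and retry with exponential backoff. It uses only the standard library. The `fetch_page` callable, the page-number pagination scheme, and the choice of `ConnectionError` as the retryable error are assumptions made for illustration; a real pipeline would plug in its own HTTP client and error types.

```python
import time
from typing import Callable, Iterator, Optional


class RateLimiter:
    """Space calls so that at most `rate` calls happen per second."""

    def __init__(self, rate: float, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / rate
        self.clock = clock
        self.sleep = sleep
        self._last_call: Optional[float] = None

    def wait(self) -> None:
        # Sleep only for the part of the interval that has not yet elapsed.
        if self._last_call is not None:
            remaining = self.min_interval - (self.clock() - self._last_call)
            if remaining > 0:
                self.sleep(remaining)
        self._last_call = self.clock()


def fetch_with_retry(fetch_page: Callable[[int], list], page: int,
                     limiter: RateLimiter, max_attempts: int = 4,
                     base_delay: float = 0.5, sleep=time.sleep) -> list:
    """Call fetch_page(page), retrying transient failures with backoff."""
    for attempt in range(1, max_attempts + 1):
        limiter.wait()
        try:
            return fetch_page(page)
        except ConnectionError:  # assumed to be the transient error type
            if attempt == max_attempts:
                raise
            # Delays grow as base_delay * 1, 2, 4, ...
            sleep(base_delay * 2 ** (attempt - 1))
    return []  # only reached if max_attempts < 1


def extract_all(fetch_page: Callable[[int], list], limiter: RateLimiter,
                **retry_options) -> Iterator[dict]:
    """Yield records page by page until an empty page is returned."""
    page = 1
    while True:
        records = fetch_with_retry(fetch_page, page, limiter, **retry_options)
        if not records:
            return
        yield from records
        page += 1
```

Passing the clock and sleep functions as parameters is a deliberate choice: it lets the pipeline be unit-tested without real waiting.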

Data Quality & Validation

Data Quality Assessment: Build comprehensive data profiling reports identifying missing values, outliers, and inconsistencies

Automated Data Validation: Create validation pipelines that check data schema, format, and business rules

Data Lineage Tracking: Implement systems to track data flow from source to final consumption

ETL Pipeline Optimization: Optimize existing ETL processes for performance and cost efficiency

Data Catalog Development: Build searchable data catalogs with metadata management
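The Automated Data Validation task can be approached in two layers: a structural check against a schema, then business rules on rows that passed the structural check. The sketch below shows one way to do this with the standard library only. The `SCHEMA` fields and the two rules are hypothetical examples, not part of any specific dataset.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict], bool]


# Hypothetical schema: field name -> expected Python type.
SCHEMA = {"order_id": int, "amount": float, "currency": str}

# Hypothetical business rules, evaluated only on structurally valid rows.
RULES = [
    Rule("amount_positive", lambda r: r["amount"] > 0),
    Rule("currency_iso_length", lambda r: len(r["currency"]) == 3),
]


def validate_record(record: dict, schema=SCHEMA, rules=RULES) -> list[str]:
    """Return a list of error messages; an empty list means the row is valid."""
    errors = []
    for field, expected in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            errors.append(
                f"wrong type for {field}: expected {expected.__name__}"
            )
    if errors:
        return errors  # business rules assume the schema already holds
    return [f"rule failed: {rule.name}" for rule in rules
            if not rule.check(record)]


def split_valid_invalid(records: list[dict]):
    """Route rows to a valid list or a rejected list with their errors."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping rejected rows together with their error messages, rather than dropping them silently, makes it easier to report data quality issues back to the source system.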

Infrastructure & Scalability

Containerized Data Pipelines: Package data processing workflows using Docker and Kubernetes

Parallel Processing Implementation: Design distributed computing solutions using Dask or Spark

Pipeline Monitoring & Alerting: Create monitoring dashboards for data pipeline health and performance

Data Backup & Recovery: Implement robust backup strategies and disaster recovery procedures

Cost Optimization Analysis: Analyze and optimize cloud data storage and processing costs

Category 2: Exploratory Data Analysis & Business Intelligence (15 Tasks)

Advanced EDA Techniques

Multi-dimensional Data Exploration: Conduct comprehensive EDA on complex datasets with 50+ variables

Time Series Pattern Analysis: Identify seasonal trends, cyclical patterns, and anomalies in time series data

Customer Segmentation Analysis: Perform RFM analysis and behavioral segmentation using clustering techniques

Correlation Network Analysis: Build correlation networks to understand variable relationships and dependencies

Geographic Data Analysis: Analyze spatial patterns and create geographic visualizations

Statistical Analysis

Hypothesis Testing Framework: Design and execute A/B tests with proper statistical controls

Power Analysis & Sample Size: Calculate optimal sample sizes for experiments and surveys

Survival Analysis: Analyze time-to-event data for customer churn and product lifecycle studies

Causal Inference Analysis: Apply causal inference techniques to understand treatment effects

Bayesian Statistical Modeling: Implement Bayesian approaches for uncertainty quantification
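For the Power Analysis & Sample Size task, a common starting point is the normal-approximation formula for comparing two proportions in an A/B test: n per group = (z_{1-α/2} + z_{power})² · [p₁(1-p₁) + p₂(1-p₂)] / (p₂ - p₁)². The sketch below implements that formula with the standard library. The conversion rates in the usage note are made-up inputs, and other formulas (for example, with pooled variance or continuity correction) give slightly different answers.

```python
import math
from statistics import NormalDist


def sample_size_two_proportions(p_control: float, p_variant: float,
                                alpha: float = 0.05,
                                power: float = 0.80) -> int:
    """Per-group sample size for a two-sided test of two proportions.

    Uses the unpooled normal approximation; treat the result as a
    planning estimate, not an exact requirement.
    """
    if p_control == p_variant:
        raise ValueError("the two rates must differ to define an effect size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for target power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)
```

For example, `sample_size_two_proportions(0.10, 0.12)` comes out at roughly 3,800 users per group, which shows why detecting a two-point lift on a 10% baseline needs far more traffic than detecting a five-point lift.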

Business Metrics & KPIs

KPI Dashboard Development: Create executive dashboards tracking key business metrics

Cohort Analysis Implementation: Build cohort analysis frameworks for customer retention studies

Marketing Attribution Modeling: Develop multi-touch attribution models for marketing campaigns

Financial Performance Analysis: Analyze revenue streams, profitability, and financial health indicators

Operational Efficiency Metrics: Design metrics to measure and optimize operational processes
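To make the Cohort Analysis Implementation task concrete, the sketch below builds a retention table from raw activity events. It assumes each event is a `(user_id, period)` pair where `period` is an integer index such as a month number; that event shape is an assumption for illustration, and production data would typically need a date-to-period mapping first.

```python
from collections import defaultdict


def cohort_retention(events):
    """Build a retention table from (user_id, period) activity events.

    A user's cohort is the first period in which they appear. The result
    maps cohort -> {offset: share of the cohort active `offset` periods
    after joining}.
    """
    events = list(events)  # iterated twice, so materialize generators

    first_seen = {}
    for user, period in events:
        first_seen[user] = min(period, first_seen.get(user, period))

    # (cohort, offset) -> set of distinct users active at that offset
    active = defaultdict(set)
    for user, period in events:
        cohort = first_seen[user]
        active[(cohort, period - cohort)].add(user)

    table = defaultdict(dict)
    for (cohort, offset), users in active.items():
        cohort_size = len(active[(cohort, 0)])  # offset 0 always exists
        table[cohort][offset] = len(users) / cohort_size

    return {cohort: dict(sorted(row.items()))
            for cohort, row in sorted(table.items())}
```

Using sets of users rather than event counts means a user who is active several times in one period is still counted once, which is usually what a retention rate is meant to express.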

Category 3: Machine Learning Model Development (20 Tasks)

Supervised Learning Applications

Customer Churn Prediction: Build end-to-end churn prediction models with feature engineering

Sales Forecasting Model: Develop time series forecasting models for revenue prediction

Price Optimization Engine: Create dynamic pricing models based on demand and competition

Credit Risk Assessment: Build credit scoring models with regulatory compliance considerations

Fraud Detection System: Develop real-time fraud detection with imbalanced data handling

Demand Forecasting: Create inventory optimization models for supply chain management

Lead Scoring Model: Build predictive models for sales lead qualification and prioritization

Product Recommendation Engine: Develop collaborative and content-based recommendation systems

Quality Control Prediction: Build models to predict product defects in manufacturing

Employee Attrition Modeling: Predict employee turnover and identify retention strategies

Unsupervised Learning & Pattern Recognition

Anomaly Detection System: Implement unsupervised anomaly detection for cybersecurity

Market Basket Analysis: Perform association rule mining for cross-selling opportunities

Topic Modeling Implementation: Extract themes and topics from large text corpora

Clustering for Market Research: Segment markets and identify customer personas

Dimensionality Reduction Pipeline: Apply PCA, t-SNE, and UMAP for high-dimensional data visualization
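For the Market Basket Analysis task, the three core metrics are support, confidence, and lift. The sketch below computes them for item pairs only, using the standard library; it is a simplified illustration rather than a full Apriori or FP-Growth implementation, and the `min_support` default is an arbitrary assumption.

```python
from collections import Counter
from itertools import combinations


def pair_rules(transactions, min_support=0.2):
    """Compute support, confidence, and lift for rules between item pairs.

    support(A, B)   = share of baskets containing both A and B
    confidence(A→B) = support(A, B) / support(A)
    lift(A→B)       = confidence(A→B) / support(B)
    """
    baskets = [set(t) for t in transactions]
    n = len(baskets)
    item_counts = Counter(item for basket in baskets for item in basket)
    pair_counts = Counter(
        pair for basket in baskets
        for pair in combinations(sorted(basket), 2)
    )

    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue
        # Each frequent pair yields two directed rules: a→b and b→a.
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]
            lift = confidence / (item_counts[rhs] / n)
            rules.append({"lhs": lhs, "rhs": rhs, "support": support,
                          "confidence": confidence, "lift": lift})
    return sorted(rules, key=lambda r: r["lift"], reverse=True)
```

A lift above 1 suggests the two items appear together more often than independence would predict, which is the signal usually used to shortlist cross-selling candidates.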

Advanced ML Techniques

Ensemble Model Development: Build voting, bagging, and boosting ensemble models

Neural Network Architecture: Design custom neural networks for specific business problems

Transfer Learning Application: Apply pre-trained models to domain-specific problems

Automated Feature Engineering: Implement automated feature selection and creation pipelines

Model Interpretability Analysis: Use SHAP, LIME, and other techniques for model explanation

Category 4: Natural Language Processing & Text Analytics (10 Tasks)

Text Processing & Analysis

Sentiment Analysis Pipeline: Build multi-class sentiment analysis for customer feedback

Named Entity Recognition: Extract entities from unstructured business documents

Document Classification System: Categorize documents for automated processing

Text Summarization Tool: Create abstractive and extractive summarization systems

Chatbot Intent Classification: Build NLP models for customer service automation

Advanced NLP Applications

Knowledge Graph Construction: Extract relationships and build knowledge graphs from text

Multi-language Text Analysis: Develop cross-lingual text processing capabilities

Contract Analysis Automation: Extract key terms and clauses from legal documents

Social Media Monitoring: Analyze brand mentions and social sentiment at scale

Voice-to-Text Analytics: Process and analyze transcribed customer service calls

Category 5: Computer Vision & Image Analytics (8 Tasks)

Image Processing Applications

Quality Control Inspection: Build automated visual inspection systems for manufacturing

Retail Analytics from Images: Analyze customer behavior and product placement from store cameras

Medical Image Analysis: Develop diagnostic assistance tools for medical imaging

Document Processing Pipeline: Extract information from scanned documents and forms

Satellite Image Analysis: Analyze geographic and environmental data from satellite imagery

Advanced Vision Applications

Object Detection System: Build real-time object detection for security and monitoring

Facial Recognition Pipeline: Implement facial recognition with privacy and bias considerations

Augmented Reality Features: Develop AR applications for retail and industrial use cases

Category 6: MLOps & Model Deployment (14 Tasks)

Model Lifecycle Management

Model Versioning System: Implement model versioning and experiment tracking

Automated Model Training: Create automated retraining pipelines with data drift detection

Model Performance Monitoring: Build monitoring systems for model accuracy and bias

A/B Testing for Models: Design frameworks for testing model performance in production

Model Registry Development: Create centralized model repositories with metadata management
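The data drift detection mentioned under Automated Model Training can be prototyped with the Population Stability Index (PSI), one of several possible drift measures. The sketch below compares a feature's training-time distribution with its recent production distribution. The equal-width binning, the bin count, and the `eps` floor for empty bins are all assumptions; a commonly cited rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds should be tuned per use case.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one feature.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i
    are the shares of baseline and recent values falling in bin i.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range go to the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

In a retraining pipeline, a check like this would typically run per feature on a schedule, with a retraining job or an alert triggered when the index crosses the chosen threshold.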

Production Deployment

API Model Serving: Deploy models as REST APIs with load balancing and scaling

Batch Prediction Pipeline: Implement large-scale batch prediction systems

Edge Computing Deployment: Deploy lightweight models for edge devices and IoT

Real-time Inference Engine: Build low-latency prediction systems for real-time applications

Model Security Implementation: Secure model endpoints and implement access controls

DevOps Integration

CI/CD for ML Pipelines: Integrate ML workflows with continuous integration systems

Infrastructure as Code: Use Terraform/CloudFormation for ML infrastructure management

Container Orchestration: Deploy ML models using Kubernetes with auto-scaling

Cost Monitoring & Optimization: Track and optimize costs for ML infrastructure and compute

Category 7: Data Visualization & Communication (10 Tasks)

Advanced Visualization

Interactive Dashboard Creation: Build responsive dashboards using Plotly, Streamlit, or Tableau

Executive Reporting Automation: Create automated reports for C-level stakeholders

Geospatial Visualization: Develop interactive maps and geographic data visualizations

Network Visualization: Create network graphs for relationship and flow analysis

Statistical Visualization: Build complex statistical plots and uncertainty visualizations

Business Communication

Data Storytelling Framework: Develop narratives that translate data insights into business actions

Presentation Automation: Create automated slide generation from analysis results

Stakeholder Communication: Tailor technical findings for different audience levels

ROI Calculation & Reporting: Quantify and communicate the business impact of data science projects

Performance Benchmarking: Create comparative analyses against industry standards

Category 8: Business Strategy & Decision Support (8 Tasks)

Strategic Analysis

Market Analysis & Competitive Intelligence: Analyze market trends and competitive positioning

Customer Lifetime Value Modeling: Calculate and optimize customer lifetime value

Resource Allocation Optimization: Use optimization techniques for budget and resource planning

Risk Assessment Modeling: Quantify business risks and develop mitigation strategies

Scenario Planning & Simulation: Build Monte Carlo simulations for strategic planning
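As an illustration of the Scenario Planning & Simulation task, the sketch below runs a small Monte Carlo simulation of annual profit under uncertain demand, price, and unit cost. Every distribution and figure in it (demand around 100,000 units, prices between 18 and 22, fixed costs of 500,000) is a made-up assumption chosen only to show the mechanics.

```python
import random
import statistics


def simulate_annual_profit(n_trials: int = 10_000, seed: int = 42) -> dict:
    """Monte Carlo estimate of annual profit under hypothetical inputs."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    fixed_costs = 500_000      # hypothetical

    profits = []
    for _ in range(n_trials):
        units = rng.gauss(100_000, 15_000)            # demand: mean, std dev
        price = rng.uniform(18.0, 22.0)               # price per unit
        unit_cost = rng.triangular(10.0, 16.0, 12.0)  # low, high, mode
        profits.append(units * (price - unit_cost) - fixed_costs)

    profits.sort()
    return {
        "mean": statistics.fmean(profits),
        "p05": profits[int(0.05 * n_trials)],  # pessimistic scenario
        "p95": profits[int(0.95 * n_trials)],  # optimistic scenario
        "prob_loss": sum(p < 0 for p in profits) / n_trials,
    }
```

Reporting the 5th and 95th percentiles and the probability of a loss alongside the mean gives decision makers a range of outcomes rather than a single point forecast.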

Advanced Business Applications

Supply Chain Optimization: Optimize inventory, logistics, and supply chain operations

Financial Portfolio Analysis: Analyze investment portfolios and risk management strategies

Regulatory Compliance Analytics: Ensure data science practices meet industry regulations

Implementation Guidelines

For Each Task:

Business Context: Understand the real-world problem and stakeholder needs

Data Requirements: Identify data sources, quality needs, and constraints

Technical Implementation: Code solutions with production-ready standards

Validation & Testing: Implement comprehensive testing and validation procedures

Documentation: Create clear documentation for technical and business audiences

Deployment Strategy: Plan for scalable, maintainable production deployment

Success Metrics: Define measurable outcomes and ROI indicators

Skill Development Path:

Foundation (Tasks 1-25): Data handling, basic analysis, and infrastructure

Intermediate (Tasks 26-60): Advanced analytics, ML model development

Advanced (Tasks 61-85): Specialized techniques, production deployment

Expert (Tasks 86-100): Strategic applications, business leadership

Tools & Technologies to Master:

Programming: Python, SQL, R, Scala

ML Frameworks: scikit-learn, TensorFlow, PyTorch, XGBoost

Data Processing: Pandas, Spark, Dask, NumPy

Visualization: Matplotlib, Seaborn, Plotly, Tableau

Cloud Platforms: AWS, Google Cloud, Azure

MLOps: MLflow, Kubeflow, Docker, Kubernetes

Databases: PostgreSQL, MongoDB, Snowflake, BigQuery

This comprehensive curriculum ensures data scientists develop both technical expertise and business acumen necessary for enterprise success.

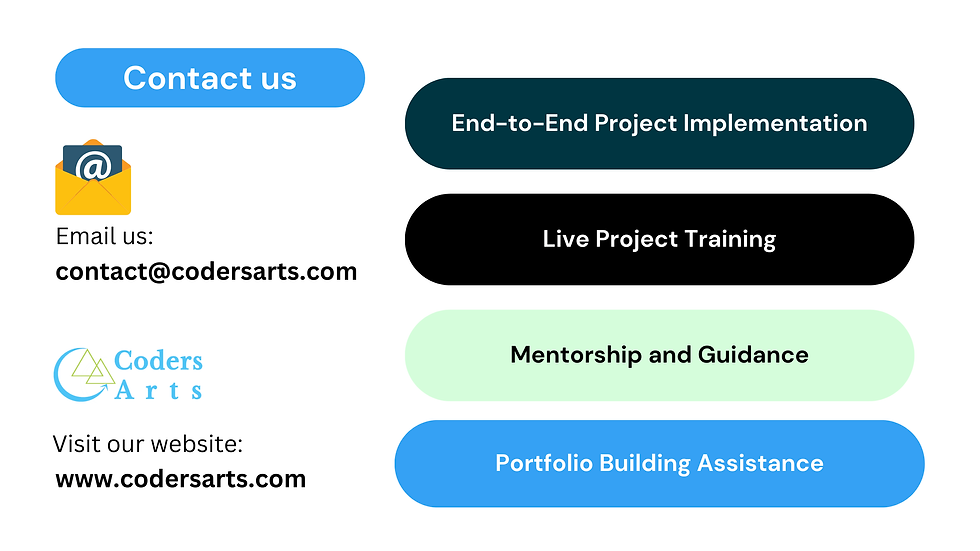

🚀 Ready to Master Enterprise Data Science? Let Codersarts Accelerate Your Journey!

Why Choose Codersarts for Your Data Science Transformation?

📈 Proven Enterprise Experience: Our expert mentors have successfully implemented these exact tasks in Fortune 500 companies, bringing real-world insights to your learning journey.

🎯 Personalized Learning Path: We customize your learning experience based on your current skill level and career goals, ensuring maximum efficiency and relevance.

💼 Industry-Ready Portfolio: Build a comprehensive portfolio of production-ready projects that demonstrate your capabilities to potential employers.

🔥 What We Offer:

1-on-1 Mentorship Programs

Personal guidance from senior data scientists with 10+ years of industry experience

Weekly code reviews and project feedback

Career coaching and interview preparation

Flexible scheduling to fit your lifestyle

Project-Based Learning

Complete end-to-end implementation of all 100 tasks

Real datasets from actual business scenarios

Code optimization and best practices training

MLOps and deployment guidance

Enterprise Skills Bootcamp

Fast-track program covering critical enterprise skills

Live coding sessions and pair programming

Team collaboration and stakeholder communication training

Industry certification preparation

Custom Corporate Training

Tailored programs for companies upskilling their data teams

On-site or remote delivery options

Team assessments and skill gap analysis

Ongoing support and consultation

Success Stories:

"Codersarts helped me transition from basic analytics to leading ML initiatives at my company. The hands-on approach and real-world projects made all the difference." - Sarah M., Senior Data Scientist

"The enterprise focus was exactly what I needed. I went from struggling with interviews to confidently architecting data solutions for Fortune 100 clients." - David L., ML Engineer

💡 Ready to Transform Your Data Science Career?

Limited Time Offer: Get 20% off your first month of mentorship when you mention this guide!

Get Started Today:

📧 Email: contact@codersarts.com

💬 Live Chat: Available 24/7 on our website

Consultation Options:

Free 30-minute career consultation - Discuss your goals and get a personalized roadmap

Portfolio review session - Get expert feedback on your current projects

Skills assessment - Identify your strengths and areas for improvement

🏆 Take Action Now:

✅ Book Your Free Consultation: Schedule a no-obligation call to discuss your data science journey

✅ Start Learning: Begin with our complimentary "First 10 Tasks" starter kit

🎯 Your Success is Our Mission

Don't just learn data science – master it with enterprise-level expertise. Whether you're starting your career, switching roles, or leading a team, Codersarts provides the guidance, resources, and support you need to excel.

Ready to build the future of data-driven decision making? Your transformation starts with a single click.

Transform Theory into Practice. Master Skills that Matter. Accelerate Your Career with Codersarts.
